LLMs peaking?

One final, interesting article on actual LLM performance. Then I need to do something else with my Sunday.

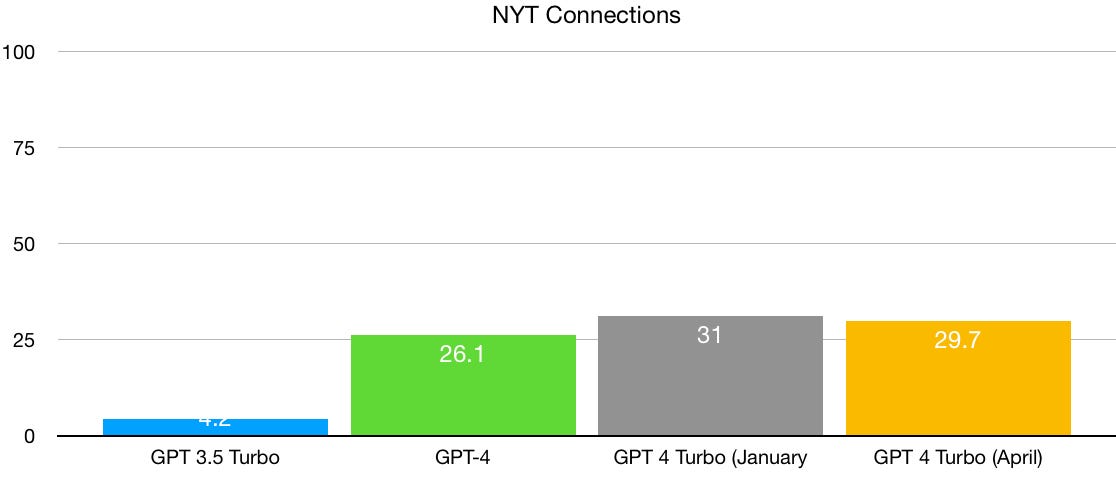

Evidence that LLMs are reaching a point of diminishing returns - and what that might mean

The conventional wisdom, well captured recently by Ethan Mollick, is that LLMs are advancing exponentially. A few days ago, in a very popular blog post, Mollick claimed that “the current best estimates of the rate of improvement in Large Language models show capabilities doubling every 5 to 14 months”.

The market capitalisation of some of these companies is an interesting point, because if ‘the future’ doesn’t actually work out there will be a reset, which is normally painful. Valuations of 100x or more depend on technology advances that are ‘coming soon’. Fair enough, you need a bit of leeway for innovation.

Still, I look at certain organisations and valuations in the EV market and see parallels. You can’t keep promising without delivering forever.

Because at the end of the day, customers have to buy what you’re selling at a price where they are happy and you make money.