LLM Quality degradation and collapse

Some interesting links for more technical pals. Any discussion probably better at a roundtable/conference as I can’t find answers. Or maybe there are clever people to follow?

Thinking in terms of Systems Development Life Cycle (SDLC) - so we’re in risk management territory not software development…you can’t hand it over and rub your hands clean. Cradle to grave. Support, monitoring and maintenance.

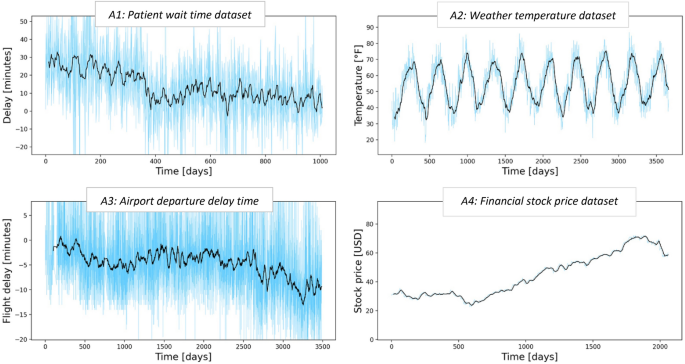

AI Model Temporal Quality Degradation - layman’s terms? You can’t set-up a model and just leave it. It goes a bit loopy over time

AI Model Collapse - layman’s terms? If models start ingesting other generated data that can lead to collapse (a bit like the Habsburgs)

There’s a bit more coverage on that last one. A more digestible explanation here

Anything more recent is just ‘Oh no the internet is going to run out of content’

Which is not that helpful if you’re interested in risk management over a life cycle or wonder if it’s been solved or mitigated. Training them is pricey in many ways.